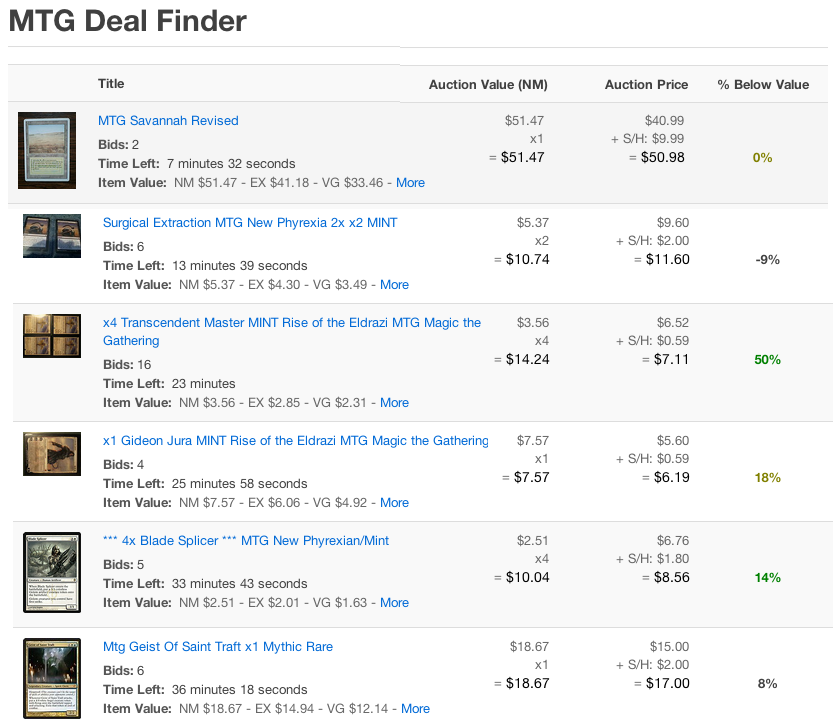

Alright, I’ll admit it. Even after 15 years, I’m still a huge fan of Magic: The Gathering. I love the game and I’m a huge collector. So, like any other collector, I’m on eBay a lot. The problem with eBay is that I’m never quite sure what a good price is for an item I’m interested in. I’ve done the long, drawn-out task of looking through completed listings, but it simply doesn’t scale. So, using the WorthMonkey and eBay APIs, I created a “filter” for Magic cards ending within the next 4 hours. The filter shows the card auctions alongside their average selling price, so that I can quickly look for deals and know exactly how much I should bid to win. I’m on this tool all day long, and if you’re interested in giving it a try, it can be found here:

Having taken a recent interest in behavioral analytics, I devised a perfect hackathon project. The idea was simple: bring behavioral analytics to the masses by building a super lightweight platform with brain-dead simple RESTful event calls. Once the hooks were in place for any given website, we could monitor the site’s usage in real time and alert the owner in the event of aberrant behavior. Given that most website owners see their site as a black box, this product would give them huge insight into how their site is being used and abused. In addition, it would provide an audit trail for determining where vulnerabilities exist in the business logic layer. Already teamed up with Ben from Box, I discussed the idea with him and he, having a passion for security as well, was stoked. Later that evening, we began thinking through the details of the platform.
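To make the "alert on aberrant behavior" idea a little more concrete, here is a rough sketch of one possible check: flag a visitor who fires more events than expected inside a sliding time window. This is not the code we wrote at the hackathon. The event shape (`user`, `action`, `time`), the function name and the thresholds are all made up for illustration.

```javascript
// Hypothetical sketch: flag a visitor whose event rate exceeds a threshold.
// The event shape ({ user, action, time }) and the limits are assumptions.
function createRateMonitor(maxEvents, windowMs) {
  var recent = {}; // user id -> timestamps (ms) still inside the window

  return function record(event) {
    var times = recent[event.user] || [];
    // Drop timestamps that have fallen out of the sliding window.
    times = times.filter(function (t) {
      return event.time - t < windowMs;
    });
    times.push(event.time);
    recent[event.user] = times;
    // True means "raise an alert for this user".
    return times.length > maxEvents;
  };
}
```

Each incoming REST event would be passed to `record`, and a `true` result would feed the alert list on the dashboard.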

The morning of the hackathon we pulled together the final details of the project. We set up a server with the usual LAMP stack and memcache for speed. We utilized the Yii framework to facilitate the mundane aspects of creating a new website (Active Record, MVC, etc) and finalized the design of the database in MySQL. We left the designs until last because our main focus was creating an actual platform that would be demo-able in 24 hours. In the end, our final design resembled a standard dashboard with a “twitter” feed of hack alerts.

At 5 pm, the contest began and we immediately set to work on creating our platform. We had loaded up on sugar and caffeine, so we were amped up and ready to roll. Ben took the database implementation, creation of the models and set up of the REST hooks. I took the creation of the AJAX hook code, implementation of the dashboard and hooking the final pieces together.

The implementation of all pieces continued throughout the night with continued doses of caffeine and sugar. We began to see that our concept was too ambitious to complete in the time allotted, so we scaled back to only what was necessary for the demo and to illustrate the feasibility of the idea. This scale back ended up saving us in the end as we finished the implementation with 30 minutes to spare.

The long night was over and the presentations began at 6 pm. With over 35 teams competing, it took over 2 hours to get through all the presentations, and there were some fantastic concepts being put forward. Ben and I were still enthralled with our own idea, but we began to doubt whether we could truly take any award home. One after another, the presentations continued. At 9 pm, it was time to announce the winners.

With great acclaim and response from the judges, our team won first prize to a roomful of applause. We had successfully killed it at the hackathon.

Check out the site here: bluedragonsec.com

Ben Trombley and I after winning the hackathon

I occasionally read through discussion boards that show off various artwork, but often find myself sifting through pages of text until I finally find the images I’m looking for. So rather than continually sift by hand, I built this tool to ease the process.

Here’s how it works. First off, rather than download all images to my server and sort them out there, we simply generate a new “lens” on the existing page, then let the client’s browser verify the images in JavaScript. Once we have the images loaded, we can remove all images smaller than 120×120 pixels (avatars and such) and display the results in an easy-to-view format. Lastly, we show the original-size artwork scaled down, so that we can easily “right click to save.” The other feature I threw in was the ability to navigate by pages, so if the discussion board you’re reading supports paging, the extractor should figure it out and let you view pages using the right and left arrows.
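The size filter is the heart of it, so here is a minimal sketch of that step. The function name and the `{ src, width, height }` shape are assumptions for the example, and I am treating an image as "too small" when either dimension is under the minimum.

```javascript
// Keep only images large enough to be artwork rather than avatars or icons.
// Each entry is assumed to look like { src, width, height }.
function filterArtwork(images, minSize) {
  return images.filter(function (img) {
    return img.width >= minSize && img.height >= minSize;
  });
}
```

In the browser, the entries could be built from `document.images`, reading each image's `naturalWidth` and `naturalHeight` once it has loaded.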

Just got back from the TechCrunch Disrupt Hackathon 2011 and took top place 2 years in a row! Last year I created “Deal Pulse” and took home the prize for “Best Business Award.” This year I had the idea for a weather notification system and called it “Weather Checker.” 6 teams won the overall contest out of 130, so needless to say, I’m stoked. Read the full article from TechCrunch here.

Need to check if your users are using a “supported” browser for your site? Don’t like how jQuery handles browser version numbers and Chrome? Here’s a bit of JavaScript to make your life easier by putting version numbers in human-readable format. Just update the numbers to match what you consider “supportable” for your site.

function is_supported_browser()

{

var userAgent = navigator.userAgent.toLowerCase();

// Is this a version of IE?

if($j.browser.msie)

{

userAgent = $j.browser.version;

version = userAgent.substring(0,userAgent.indexOf('.'));

if ( version >= 8 ) return true;

}

// Is this a version of Chrome?

$j.browser.chrome = /chrome/.test(navigator.userAgent.toLowerCase());

if($j.browser.chrome)

{

userAgent = userAgent.substring(userAgent.indexOf('chrome/') +7);

version = userAgent.substring(0,userAgent.indexOf('.'));

if (version >= 13) return true;

}

// Is this a version of Safari?

if($j.browser.safari)

{

userAgent = userAgent.substring(userAgent.indexOf('version/') +8);

version = userAgent.substring(0,userAgent.indexOf('.'));

if (version >= 5) return true;

}

// Is this a version of firefox?

if($j.browser.mozilla && navigator.userAgent.toLowerCase().indexOf('firefox') != -1)

{

userAgent = userAgent.substring(userAgent.indexOf('firefox/') +8);

// parseFloat keeps the minor version ("3.6") and lets us compare numerically

version = parseFloat(userAgent);

if (version >= 3.6) return true;

}

return false;

}
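The version parsing can also be pulled out into a small helper that works on any user agent string and always compares numbers, not strings (as strings, "10" sorts below "3.6"). This is only a sketch: `versionAfter` is a name I made up, and it does not depend on `$.browser`, which jQuery removed in version 1.9.

```javascript
// Extract a numeric major.minor version following a token such as "chrome/".
// Returns NaN when the token is absent.
function versionAfter(userAgent, token) {
  var ua = userAgent.toLowerCase();
  var start = ua.indexOf(token);
  if (start === -1) return NaN;
  // parseFloat stops at the second dot, so "13.0.782" becomes 13.
  return parseFloat(ua.substring(start + token.length));
}
```

A check such as `versionAfter(navigator.userAgent, 'firefox/') >= 3.6` then reads the same way for every browser.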

I’ve had quite a few questions about how I do AJAX caching in Titanium Appcelerator, so here’s my solution.

First, I needed some persistent storage with a very general structure. I could have gone with flat files, but I decided to make use of the built-in SQLite engine, so that I could easily access entries and delete old data.

Database

var db_conn = Ti.Database.open('app_db1');

db_conn.execute('CREATE TABLE IF NOT EXISTS `remote_cache` ' +

'(key TEXT, type TEXT, valid_till TEXT, value TEXT, ' +

'PRIMARY KEY (key, type))');

Next, I need a wrapper class for my AJAX calls. Note the “get” function, which is the main entry point. Here is the signature and explanation:

get: function (url, params, type, valid_for, callback, skip_cache)

- url : string : the full url to call

- params : object : a simple object with name/value pairs for POST variables

- type : string : the cache domain for this call. Useful for clearing all cache data for a specific domain

- valid_for : string : how long the cache should remain valid, written as a SQLite date modifier (e.g. '+1 hour')

- callback : function : the function to call on completion or failure of the request

- skip_cache : boolean : an option to bypass the cache and perform write through
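The valid_for string is handed straight to SQLite's datetime('now', ?) in update_local, so SQLite does the date math. If you want to see what a modifier works out to in plain JavaScript, here is a small sketch. `expiryFor` and `UNIT_MS` are names I made up, and only a few units are handled; SQLite itself accepts more.

```javascript
// Convert a modifier such as '+1 hour' or '+7 days' into an expiry Date.
// Only minutes, hours and days are handled in this sketch.
var UNIT_MS = { minute: 60000, hour: 3600000, day: 86400000 };

function expiryFor(modifier, now) {
  var match = /^\+(\d+) (minute|hour|day)s?$/.exec(modifier);
  if (!match) throw new Error('Unsupported modifier: ' + modifier);
  return new Date(now.getTime() + Number(match[1]) * UNIT_MS[match[2]]);
}
```

For example, `expiryFor('+7 days', new Date())` gives the moment one week from now.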

AJAX Class

var ajax =

{

http_conn: Ti.Network.createHTTPClient(),

url:'',

type:'',

params:{},

key:'',

valid_for: '+1 hour',

callback: null,

debug:true,

get: function (url, params, type, valid_for, callback, skip_cache)

{

this.key = MD5(url + '|' + JSON.stringify(params));

this.url = url;

this.params = params;

this.valid_for = valid_for;

this.type = type;

this.callback = callback;

if (ajax.debug) Titanium.API.info('Ajax Call');

if (skip_cache)

{

this.remote_grab();

}

else if (!this.local_grab())

{

this.remote_grab();

}

},

abort : function ()

{

this.http_conn.abort();

},

local_grab: function ()

{

if (ajax.debug) Titanium.API.info('Checking Local key:' + this.key);

var rows = db_conn.execute('SELECT valid_till, value FROM remote_cache WHERE key = ? AND type = ? AND valid_till > datetime(\'now\')', this.key, this.type);

if (rows.isValidRow())

{

if (ajax.debug) Titanium.API.info('Local cache found with expiration:' + rows.fieldByName('valid_till'));

// read the cached value, then close the result set exactly once

var cached = JSON.parse(rows.fieldByName('value'));

rows.close();

this.callback(cached);

return true;

}

rows.close();

return false;

},

remote_grab: function ()

{

if (ajax.debug) Titanium.API.info('Calling Remote: ' + this.url + ' - PARAMS: '+ JSON.stringify(this.params));

this.abort();

this.http_conn.setTimeout(10000);

// catch errors

this.http_conn.onerror = function(e)

{

//alert('Call failed');

ajax.callback({result:false});

Ti.API.info(e);

};

// open connection

this.http_conn.open('POST', this.url);

// act on response

var key = this.key;

var valid_for = this.valid_for;

var callback = this.callback;

var type = this.type;

this.http_conn.onload = function()

{

if (ajax.debug) Titanium.API.info('Response from server:' + this.responseText);

if (this.responseText != 'null')

{

var response = JSON.parse(this.responseText);

if (response.result == true || (response && response.length > 0))

{

ajax.update_local(key, type, valid_for, response);

callback(response);

}

else if (response.result == false && response.error && response.error.length > 0)

{

callback({result:false,error:response.error});

}

else

{

callback({result:false,error:'Invalid Result (1)'});

}

}

else

{

callback({result:false,error:'Invalid Result (2)'});

}

};

// Send the HTTP request

this.http_conn.send(this.params);

},

update_local: function (key, type, valid_for, response)

{

if (ajax.debug) Ti.API.info('Updating Cache: KEY: ' + key + ' TYPE: ' + type +' - FOR: '+valid_for+', ' + JSON.stringify(response));

db_conn.execute('DELETE FROM remote_cache WHERE (valid_till <= datetime(\'now\') OR key = ?) AND type = ?', key, type);

db_conn.execute('INSERT INTO remote_cache ( key, type, valid_till, value ) VALUES(?,?,datetime(\'now\',?),?)',key, type, valid_for, JSON.stringify(response));

}

};
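If you want to play with the cache flow outside of Titanium, the same read-through and write-through logic can be sketched with a plain object standing in for the SQLite table. Everything here is illustrative: `createCache`, `fetchRemote` and `now` are names I made up, and the TTL is in milliseconds rather than a SQLite modifier.

```javascript
// In-memory stand-in for the remote_cache table, keyed by type + key.
// `fetchRemote` and `now` are injected so the flow can be exercised anywhere.
function createCache(fetchRemote, now) {
  var store = {};

  return function get(key, type, ttlMs, callback, skipCache) {
    var id = type + '|' + key;
    var entry = store[id];
    // Serve from cache when the entry exists and has not expired.
    if (!skipCache && entry && entry.validTill > now()) {
      callback(entry.value);
      return;
    }
    // Otherwise go remote and write the fresh response through to the cache.
    fetchRemote(key, function (response) {
      store[id] = { value: response, validTill: now() + ttlMs };
      callback(response);
    });
  };
}
```

As in the class above, skipping the cache only skips the read; the fresh response still replaces the stored entry.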

Just picked up a 16GB Black iPad 2 today and quickly noticed that it was bleeding yellow light from the bottom. It becomes more apparent on a black background. See video below.

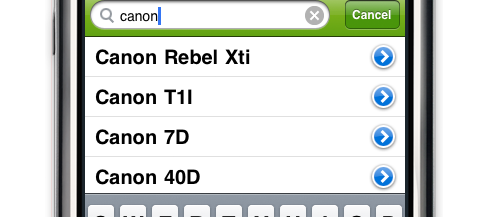

I recently began using Titanium Appcelerator to build an iPhone app for WorthMonkey. One of the features I love in any application is the ability to search a table using remote data. However, this is very difficult to pull off in Appcelerator, because its table search works only on local data. So here’s how I got around that limitation to make a true remote search table.

First, it’s quite easy to add a search bar to a table, so I did that and then attached a listener to the “change” event. But when you attempt to do your remote call here, the built-in filter fires first and overlays the current table, so updating the table contents afterward is of no help. The trick is to fire the filter event again AFTER you’ve filled the table. So right after setData, I re-assign search.value to itself to make the filter fire again, and in the “change” listener I check the value against last_search to make sure we don’t start infinite calls. Enjoy!

//SEARCH BAR

var search = Titanium.UI.createSearchBar({

barColor:'#77B121',

height:43,

hintText:'What\'s It Worth?',

top:0

});

//AUTOCOMPLETE TABLE

var table_data = [];

var autocomplete_table = Titanium.UI.createTableView({

search: search,

scrollable: true,

top:0

});

win.add(autocomplete_table);

//

// SEARCH BAR EVENTS

//

var last_search = null;

// holds pending setTimeout handles, keyed by name

var timers = {};

search.addEventListener('change', function(e)

{

if (search.value.length > 2 && search.value != last_search)

{

clearTimeout(timers['autocomplete']);

timers['autocomplete'] = setTimeout(function()

{

last_search =search.value;

auto_complete(search.value);

}, 300);

}

return false;

});

function auto_complete(search_term)

{

if (search_term.length > 2)

{

var url = 'YOURURL' + encodeURIComponent(search_term);

var ajax_cache_domain = 'autocomplete';

var params = {};

var cache_for = '+7 days';

ajax.get(url, params, ajax_cache_domain, cache_for, function (response)

{

if (typeof(response) == 'object')

{

var list = response;

// Empty array "data" for our tableview

table_data = [];

for (var i = 0; i < list.length; i++)

{

//Ti.API.info('row data - ' + data[i].value);

var row = Ti.UI.createTableViewRow(

{

height: 40,

title: list[i].value.replace(/^\s+|\s+$/g,""),

hasDetail:true

});

// apply rows to data array

table_data.push(row);

};

// set data into tableView

autocomplete_table.setData(table_data);

search.value = search.value;

}

else

{

alert(response.error);

}

});

}

}
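The guard against infinite calls is the part that is easiest to get wrong, so here it is on its own as a small sketch. `createChangeGuard` is a made-up name, and unlike the listener above it records the last search immediately instead of inside the timeout.

```javascript
// Decide whether a 'change' event should trigger a remote lookup.
// Re-assigning the same value fires 'change' again, so repeats are ignored.
function createChangeGuard(minLength) {
  var lastSearch = null;

  return function shouldSearch(value) {
    if (value.length <= minLength || value === lastSearch) return false;
    lastSearch = value;
    return true;
  };
}
```

With `createChangeGuard(2)`, the second "change" caused by re-assigning search.value returns false, so the remote call happens only once per distinct term.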

Original Post

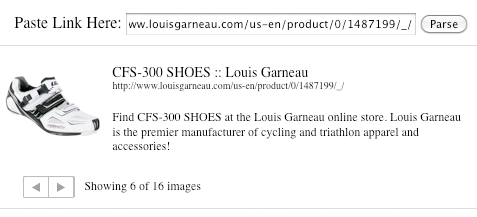

So I wanted to build a link parser like the one on Facebook, but didn’t find one that suited me. So I built one. My code is based off the code found here, but I rewrote much of it to be cleaner and to return JSON rather than HTML.

Code Change – Feb 2nd, 2011

Added some refinements to the cleaning mechanism and greatly sped up the image parser.

HTML

<script src="http://ajax.googleapis.com/ajax/libs/jquery/1.4.4/jquery.min.js"></script>

<style>

#atc_bar{width:500px;}

#attach_content{border:1px solid #ccc;padding:10px;margin-top:10px;}

#atc_images {width:100px;height:120px;overflow:hidden;float:left;}

#atc_info {width:350px;float:left;height:100px;text-align:left; padding:10px;}

#atc_title {font-size:14px;display:block;}

#atc_url {font-size:10px;display:block;}

#atc_desc {font-size:12px;}

#atc_total_image_nav{float:left;padding-left:20px}

#atc_total_images_info{float:left;padding:4px 10px;font-size:12px;}

</style>

<br /><br /><br /><br />

<div align="center">

<h1>Parse a Link Like Facebook with PHP and Jquery</h1>

<div id="atc_bar" align="center">

Paste Link Here: <input type="text" name="url" size="40" id="url" value="" />

<input type="button" name="attach" value="Parse" id="attach" />

<input type="hidden" name="cur_image" id="cur_image" />

<div id="loader">

<div align="center" id="atc_loading" style="display:none"><img src="load.gif" alt="Loading" /></div>

<div id="attach_content" style="display:none">

<div id="atc_images"></div>

<div id="atc_info">

<label id="atc_title"></label>

<label id="atc_url"></label>

<br clear="all" />

<label id="atc_desc"></label>

<br clear="all" />

</div>

<div id="atc_total_image_nav" >

<a href="#" id="prev"><img src="prev.png" alt="Prev" border="0" /></a><a href="#" id="next"><img src="next.png" alt="Next" border="0" /></a>

</div>

<div id="atc_total_images_info" >

Showing <span id="cur_image_num">1</span> of <span id="atc_total_images">1</span> images

</div>

<br clear="all" />

</div>

</div>

<br clear="all" />

</div>

</div>

JavaScript

<script>

$(document).ready(function(){

// parse the link when the button is clicked

$('#attach').bind("click", parse_link);

function parse_link ()

{

if(!isValidURL($('#url').val()))

{

alert('Please enter a valid url.');

return false;

}

else

{

$('#atc_loading').show();

$('#atc_url').html($('#url').val());

$.post("fetch.php?url="+encodeURIComponent($('#url').val()), {}, function(response){

//Set Content

$('#atc_title').html(response.title);

$('#atc_desc').html(response.description);

$('#atc_price').html(response.price);

$('#atc_total_images').html(response.total_images);

$('#atc_images').html(' ');

$.each(response.images, function (a, b)

{

$('#atc_images').append('<img src="'+b.img+'" width="100" id="'+(a+1)+'">');

});

$('#atc_images img').hide();

//Flip Viewable Content

$('#attach_content').fadeIn('slow');

$('#atc_loading').hide();

//Show first image

$('img#1').fadeIn();

$('#cur_image').val(1);

$('#cur_image_num').html(1);

// next image

$('#next').unbind('click');

$('#next').bind("click", function(){

var total_images = parseInt($('#atc_total_images').html());

if (total_images > 0)

{

var index = $('#cur_image').val();

$('img#'+index).hide();

if(index < total_images)

{

new_index = parseInt(index, 10) + 1;

}

else

{

new_index = 1;

}

$('#cur_image').val(new_index);

$('#cur_image_num').html(new_index);

$('img#'+new_index).show();

}

});

// prev image

$('#prev').unbind('click');

$('#prev').bind("click", function(){

var total_images = parseInt($('#atc_total_images').html());

if (total_images > 0)

{

var index = $('#cur_image').val();

$('img#'+index).hide();

if(index > 1)

{

new_index = parseInt(index, 10) - 1;

}

else

{

new_index = total_images;

}

$('#cur_image').val(new_index);

$('#cur_image_num').html(new_index);

$('img#'+new_index).show();

}

});

});

}

};

});

function isValidURL(url)

{

// named urlPattern so it does not shadow the built-in RegExp constructor

var urlPattern = /(ftp|http|https):\/\/(\w+:{0,1}\w*@)?(\S+)(:[0-9]+)?(\/|\/([\w#!:.?+=&%@!\-\/]))?/;

return urlPattern.test(url);

}

</script>
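The prev and next handlers do the same wrap-around arithmetic in two places. As a sketch, that logic could live in one small function. `stepIndex` is a made-up name, and `direction` is assumed to be 1 or -1.

```javascript
// Step a 1-based image index forward or backward, wrapping at either end.
function stepIndex(current, total, direction) {
  if (total <= 0) return 0;
  // Shift to 0-based, step, wrap with modulo, then shift back to 1-based.
  return ((current - 1 + direction + total) % total) + 1;
}
```

The click handlers would then only differ in the direction they pass.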

PHP

$url = urldecode($_REQUEST['url']);

$url = checkValues($url);

$return_array = array();

$base_url = substr($url,0, strpos($url, "/",8));

$relative_url = substr($url,0, strrpos($url, "/")+1);

// Get Data

$cc = new cURL();

$string = $cc->get($url);

$string = str_replace(array("\n","\r","\t",'</span>','</div>'), '', $string);

$string = preg_replace('/(<(div|span)\s[^>]+\s?>)/', '', $string);

if (mb_detect_encoding($string, "UTF-8") != "UTF-8")

$string = utf8_encode($string);

// Parse Title

$nodes = extract_tags( $string, 'title' );

$return_array['title'] = trim($nodes[0]['contents']);

// Parse Base

$base_override = false;

$base_regex = '/<base[^>]*'.'href=[\"|\'](.*)[\"|\']/Ui';

preg_match_all($base_regex, $string, $base_match, PREG_PATTERN_ORDER);

if(strlen($base_match[1][0]) > 0)

{

$base_url = $base_match[1][0];

$base_override = true;

}

// Parse Description

$return_array['description'] = '';

$nodes = extract_tags( $string, 'meta' );

foreach($nodes as $node)

{

if (strtolower($node['attributes']['name']) == 'description')

$return_array['description'] = trim($node['attributes']['content']);

}

// Parse Images

$images_array = extract_tags( $string, 'img' );

$images = array();

for ($i=0;$i<sizeof($images_array);$i++)

{

$img = trim(@$images_array[$i]['attributes']['src']);

$width = preg_replace("/[^0-9.]/", '', (string) @$images_array[$i]['attributes']['width']);

$height = preg_replace("/[^0-9.]/", '', (string) @$images_array[$i]['attributes']['height']);

$ext = trim(pathinfo($img, PATHINFO_EXTENSION));

if($img && $ext != 'gif')

{

if (preg_match('#^https?://#i', $img))

;

else if (substr($img,0,1) == '/' || $base_override)

$img = $base_url . $img;

else

$img = $relative_url . $img;

if ($width == '' && $height == '')

{

$details = @getimagesize($img);

if(is_array($details))

{

list($width, $height, $type, $attr) = $details;

}

}

$width = intval($width);

$height = intval($height);

if ($width > 199 || $height > 199 )

{

if (

(($width > 0 && $height > 0 && (($width / $height) < 3) && (($width / $height) > .2))

|| ($width > 0 && $height == 0 && $width < 700)

|| ($width == 0 && $height > 0 && $height < 700)

)

&& strpos($img, 'logo') === false )

{

$images[] = array("img" => $img, "width" => $width, "height" => $height, 'area' => ($width * $height),'offset' => $images_array[$i]['offset']);

}

}

}

}

$return_array['images'] = array_values(($images));

$return_array['total_images'] = count($return_array['images']);

header('Cache-Control: no-cache, must-revalidate');

header('Expires: Mon, 26 Jul 1997 05:00:00 GMT');

header('Content-type: application/json');

echo json_encode($return_array);

exit;
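The trickiest part of the PHP is turning each image src into a full URL. Here is the same three-way decision (absolute, root-relative, page-relative) sketched in JavaScript so it can be tried in isolation. `resolveImageUrl` is a made-up name, the base tag override is left out, and the page URL is assumed to contain a path after the host.

```javascript
// Mirror of the three cases in the PHP: absolute, root-relative, page-relative.
function resolveImageUrl(src, pageUrl) {
  // Already absolute: leave it alone.
  if (/^https?:\/\//i.test(src)) return src;
  // Scheme + host, found by looking for the first slash after "https://".
  var origin = pageUrl.substring(0, pageUrl.indexOf('/', 8));
  // Everything up to and including the last slash of the page URL.
  var folder = pageUrl.substring(0, pageUrl.lastIndexOf('/') + 1);
  return src.charAt(0) === '/' ? origin + src : folder + src;
}
```

In current browsers and Node, `new URL(src, pageUrl).href` does this resolution natively and covers more edge cases.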